My Personal Methodology To Answering Any Time-Complexity Problem

You Will Never Struggle To Answer Any Asymptotic Complexity Question Again!

During the course of my corporate journey, I have interviewed many candidates for various software engineering roles. Among the many topics I cover, time complexity, asymptotic analysis, and worst-case analysis are the ones where candidates often struggle the most.

The real kicker is that most of these candidates are often highly proficient in advances topics like Algorithm & Data Structure Design, Database Systems, and Computer Network Algorithm. But it is often the strong basics of a candidate that make or break the case for them.

But why so many candidates struggle to analyse the complexity of a piece of code? In my opinion, it is because they over-complicate the process.

Candidates usually tries to perform pattern matching rather than an actual complexity analysis. They tend to evaluate the code based on familiar algorithms and their complexities. However, these candidates struggle significantly when the code doesn’t fit into a known pattern and requires a fresh analysis.

In this blog, I will talk about the methodology I would follow when answering such questions in an interview. As an added bonus, we will specifically talk about answering complexity questions around recursive algorithms.

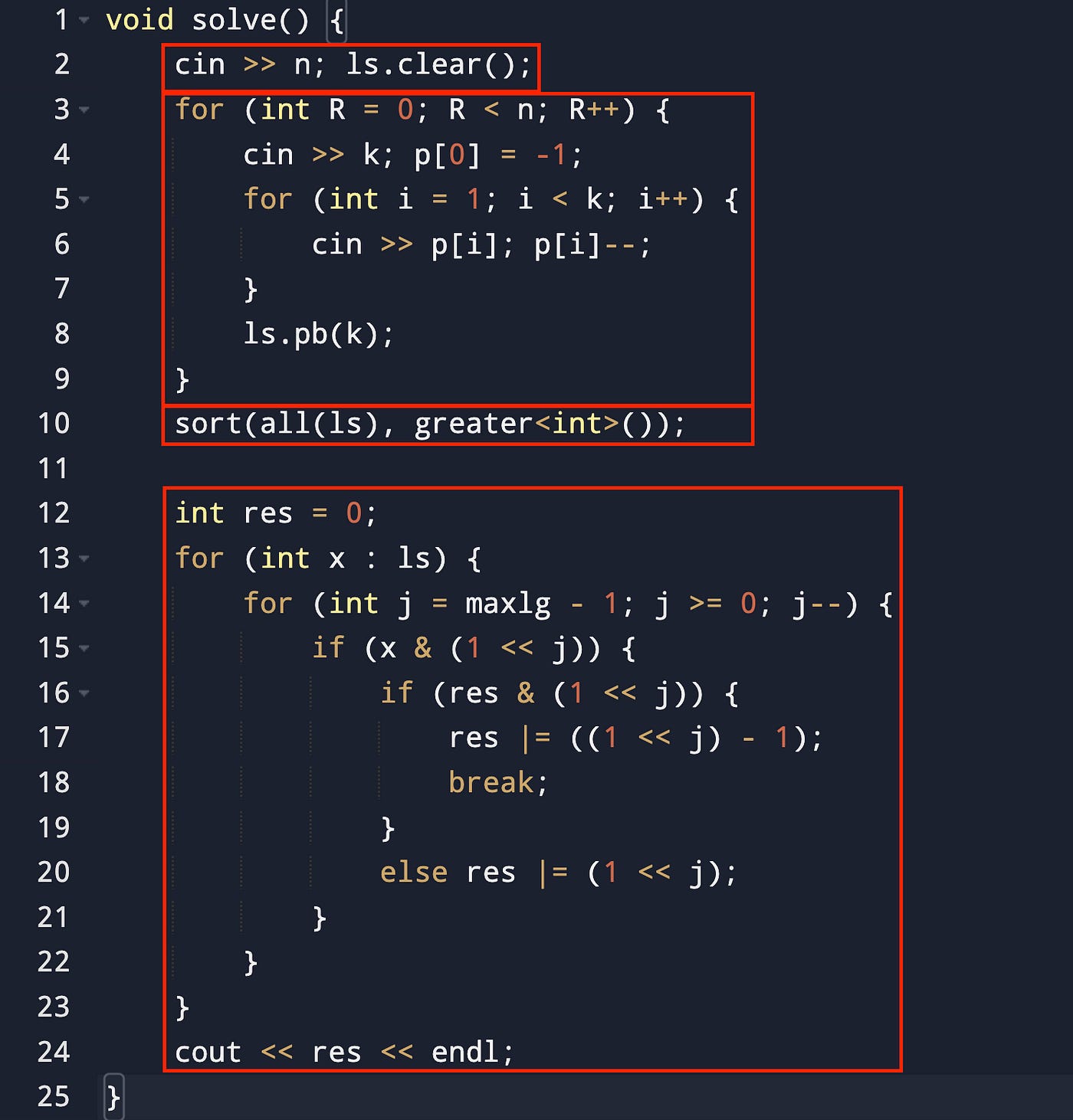

Breaking Down The Complex Code Into Independent Pieces

A large piece of code may look daunting at first, so I start by breaking the code into smaller independent pieces.

Every statement, expression, and block of code is an independent component that can be analyzed separately. Therefore, the first step is to examine the method or the script or the code block and identify these distinct components.

Each independent part identified becomes a subtask. These subtasks should then be “recursively” solved for time complexity.

When solution to all these sub-parts have been identified, they can be combined together to form the answer for the larger code.

Specifically, if we are computing the Big-O complexity, the Big-O complexity of the entire code is the largest Big-O complexity of all the sub-parts.

Solving For Loops and Dependent Nested Loops

Breaking the code into independent pieces and solving them individually works well until we hit loops and nested loops. This is where things start becoming interesting.

If the number of times the outermost loop runs, in worst case, is fixed and is not influenced by the code contained in loop’s body then the calculation is simple. I recursively find out the time complexity of the loop’s body and multiply it with the number of times the loop execute!

But life is not always this simple. Consider the following sample code and try to compute its time complexity.

I recommend you to analyse the code and come up with an answer before moving on.

The correct answer is O(N). Well done if you calculated that correctly!

The key is to analyse the code and not jump to a quick & incorrect conclusion that ‘the outer loop will run for N iterations’. In fact, the outer loop will only have two iterations.

A wide range of possibilities can arise when loop variables are updated inside nested loops. While there is no general formula for solutions to such problems, I generally start by following outer loop for 2–3 iterations specifically focusing on nested loops that update the outer loop’s variable. In this way, I grasp how many iterations of outer loop will take place.

Let us take another example.

Let us try to run a few iterations for the outer-loop. We begin with i=n. During the first iteration, the inner loop will run for n iterations until ibecomes 2n. Since the condition for the outer loop is i < n*n, we will continue with the second iteration. In the second iteration, the inner loop will again run n times until i becomes 3n.

Did you start seeing the pattern?

The outer loop is run only when i is n, 2n, 3n, …, (n-1)*n. Thus the outer loop will run only n times.

It is also clear that the inner loop will run n times in each iteration of the outer loop. Thus, assuming the complexity of code contained inside the inner loop to be O(1), the overall complexity can be stated as O(n)*O(n) = O(n^2).

Solving for the Time Complexity of Expressions and Statements

If an expression or statement is composed only up of atomic operations, it is a constant time operation.

// Some constant time operations

int p = q + r;

double c_square = a * a + b * b;

cin >> x;

res |= (1<<j);

p--;When an expression or statement contains method calls, it is important to analyse those methods before concluding the complexity of the complete expression/statement.

Consider the following example.

Pause for a moment and try to find the time complexity for the expression above!

Since the expression contains function calls, I will begin by first finding out the complexity of the function f1. This function consists of three independent parts of which the first and the third one are constant time operations. For the second part, I observe that the body of the loop is of constant time complexity and the loop runs for x iterations. Thus the overall complexity of the function turns out to be O(x).

When performing an asymptotic analysis of a function call, the complexities of computing the outermost function and its arguments add up.

Based on the observation above, let us first calculate the complexity of the inner most function call i.e., f1(n). As noted earlier, the Big-O complexity for this method call will be O(n) and its return value will be of the order of O(n*n).

Next we will calculate the time complexity for the outermost function call. Since the input to the outermost function is of the order of O(n*n) and the function has the complexity of O(x) where x is the size of input, it is clear that the overall complexity of the outermost function call is O(n*n).

And since for our given statement there are no other operations that takes more than constant time, the overall complexity of the statement is O(n^2).

💡 Most often an expression contains calls to library defined methods that are not directly available for complexity analysis. While it is not possible to memorise the complexity of each and every available method, we should be aware of the data structures and algorithm behind popular interfaces. This will help us estimate the complexity of those methods during interviews.

Complexity Analysis When Dealing With Conditionals

The idea of analysing conditionals is same as that of any other piece of code. You analyse different branches of the conditionals independently and later on combine the results together.

So if I am calculating for the worst time complexity, I would focus on the slowest branch. On the other hand, if I am calculating for the best time complexity, I would consider the fastest of all the branches.

BONUS: Analysing Recursive Code for Complexity

One of the most difficult programs to analyse for time complexities are the recursive programs. This is because many a time the actual complexity of a recursive function is wildly different from a single iteration for that function.

The formal way of calculating the complexities for recursive functions is to use the Master Theorem which is stated as follows.

T(n) = aT(n/b) + f(n),

where,

n = size of input

a = number of subproblems in the recursion

n/b = size of each subproblem. All subproblems are assumed to have the

same size.

f(n) = cost of the work done outside the recursive call, which includes

the cost of dividing the problem and cost of merging the solutions

T(n) has the following asymptotic bounds:

1. If f(n) = O(nlogb a-ϵ), then T(n) = Θ(nlogb a).

2. If f(n) = Θ(nlogb a), then T(n) = Θ(nlogb a * log n).

3. If f(n) = Ω(nlogb a+ϵ), then T(n) = Θ(f(n)).

ϵ > 0 is a constant.While the Master Theorem is the most recommended method of calculating the complexities of recursive methods, it is often difficult to remember and use specially in an interview setting where you might not have access to the internet to verify the theorem.

When solving for such problem, I generally opt for the tree-diagram method.

In the tree-diagram method, each call to the recursive function is denoted by a tree node. The value on the node denotes the size of the input. Parent-child relationship between the nodes is used to denote the recursive calls made. Here is an example of the tree-diagram for fibonacci recursive code.

Once I have created the tree diagram, I ask myself these three questions ~

What is the height of the tree when the root has the label of n?

What is the relation between number of nodes and the tree level?

What is the largest label of a node at any level?

Once I find the answer to these questions, the problem of finding the worst case time complexity reduces to a simple arithmetic problem.

f(n) = cost of the work done outside the recursive call, which includes

the cost of dividing the problem and cost of merging the solutions

complexity = O(1)

for tree_level in 0 to max_height(n):

count = nodes_at_level(tree_level)

size = largest_node_value_at_level(tree_level)

complexity += count * f(size)I have denoted the calculation in pseudo-code but trust me, the calculation on paper is really straightforward.

A solid understanding of computer science fundamentals is a hallmark of a strong candidate. Great developers not only have this knowledge but also possess the ability to analyze their code effectively. Without thorough analysis, even the best code cannot reach its full potential for improvement.

If you reached this far, Congratulations! If you enjoyed reading this article consider liking and leaving a comment. This would greatly help me as a creator!